Speech and Language

Understanding speech requires the continuous integration of information as the speech stream unfolds. At the same time, the timing of speech is itself a rich source of information about its content. How does the brain exploit this temporal structure to process speech optimally?

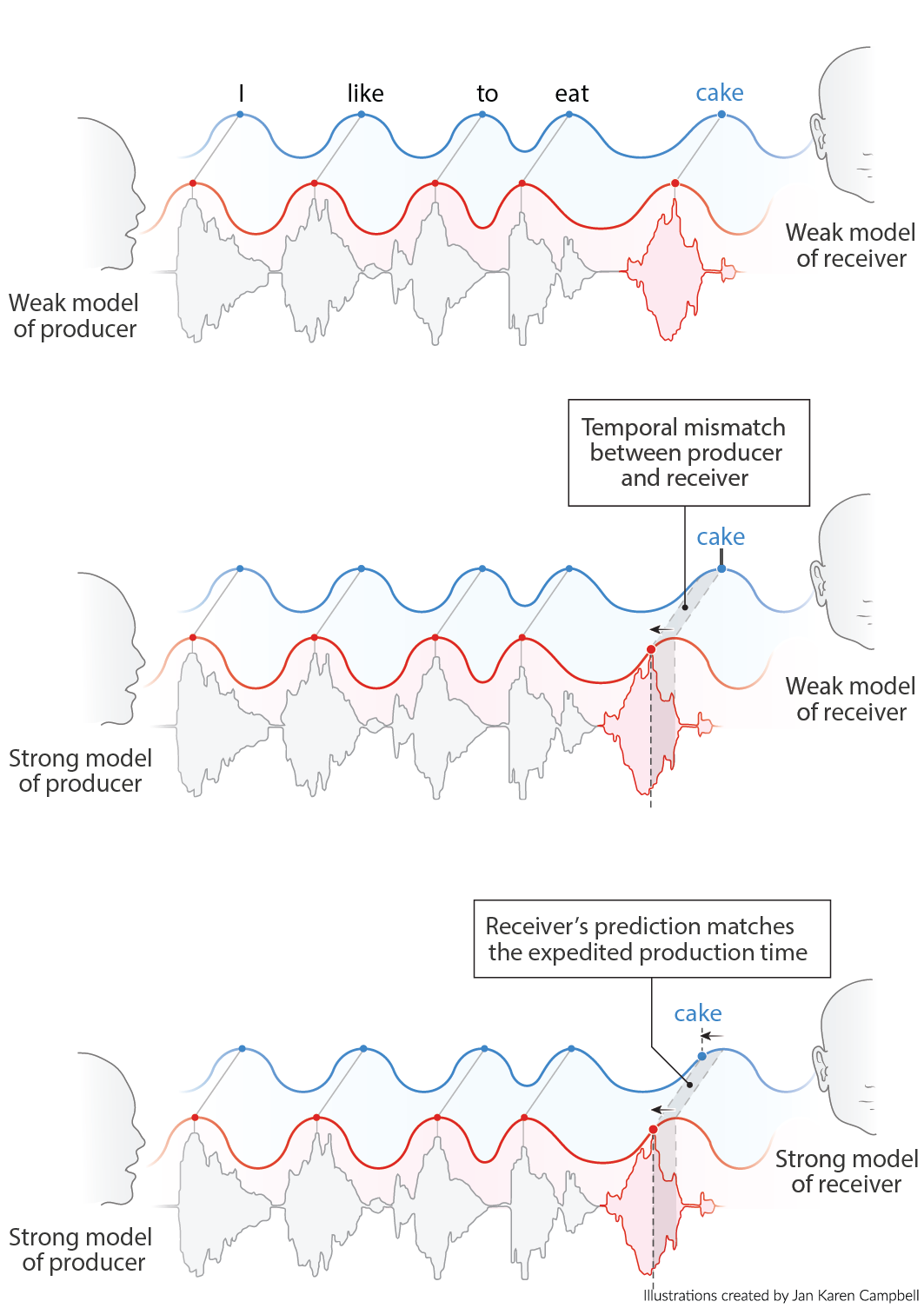

In a recent computational model, we propose that neural oscillations can simultaneously track temporal regularities in speech and exploit its temporal variability to encode information. This is possible because speech that is predictable in content tends to be uttered earlier. The brain can therefore use the timing of speech to extract information about its content.
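As a toy illustration of the timing argument above, the sketch below simulates words placed on a rhythmic grid. Each word is shifted earlier in proportion to how predictable it is, and the sketch then reads out predictability from the phase of an oscillation at word onset. This is not the published model. The 4 Hz rhythm, the 60 ms maximum shift, the timing noise, and the helper names (`simulate_words`, `onset_phase`, `decode_predictable`) are all hypothetical choices made only for this example.

```python
import math
import random

# All parameters below are hypothetical, chosen only for illustration.
RHYTHM_HZ = 4.0            # assumed syllable-rate (theta) oscillation, in Hz
PERIOD = 1.0 / RHYTHM_HZ   # nominal interval between word onsets, in seconds
MAX_SHIFT = 0.06           # assumed: a fully predictable word arrives 60 ms early
JITTER = 0.01              # assumed timing noise (standard deviation), in seconds


def simulate_words(n, seed=0):
    """Return (predictability, onset_time) pairs for n words.

    Toy assumption: each word has a nominal slot on a rhythmic grid, and the
    more predictable the word, the earlier it is uttered relative to that slot.
    """
    rng = random.Random(seed)
    words = []
    for i in range(n):
        p = rng.random()                                  # predictability in [0, 1)
        nominal = i * PERIOD                              # slot on the rhythmic grid
        onset = nominal - MAX_SHIFT * p + rng.gauss(0.0, JITTER)
        words.append((p, onset))
    return words


def onset_phase(onset):
    """Phase of the oscillation at a word onset, wrapped to [-pi, pi)."""
    phase = 2 * math.pi * RHYTHM_HZ * onset
    return (phase + math.pi) % (2 * math.pi) - math.pi


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Phase reached by a word with predictability 0.5 (no noise); used as a boundary.
PHASE_THRESHOLD = -2 * math.pi * RHYTHM_HZ * MAX_SHIFT * 0.5


def decode_predictable(phase, threshold=PHASE_THRESHOLD):
    """Guess 'predictable' (p > 0.5) when the onset falls early in the cycle."""
    return phase < threshold


if __name__ == "__main__":
    words = simulate_words(500)
    ps = [p for p, _ in words]
    phases = [onset_phase(t) for _, t in words]
    r = pearson(ps, phases)
    hits = sum(decode_predictable(ph) == (p > 0.5) for p, ph in zip(ps, phases))
    print(f"r(predictability, phase) = {r:.2f}")
    print(f"decoding accuracy = {hits / len(words):.2f}")
```

With these made-up settings, onset phase correlates negatively with predictability. A single phase threshold then recovers which words were predictable well above chance. The point is only that timing relative to an ongoing rhythm can carry content information. The sketch does not reproduce how the published model generates predictions or oscillatory dynamics.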

In this research line, we investigate the interaction between tracking and coding, as in the research line "Temporal tracking versus temporal coding", but applied specifically to temporal information in speech and language.

Relevant publications

- Ten Oever, S., Titone, L., te Rietmolen, N., & Martin, A.E. (2024). Phase-dependent word perception emerges from region-specific sensitivity to the statistics of language. Proceedings of the National Academy of Sciences.

- Ten Oever, S., Kaushik, K., & Martin, A.E. (2022). Inferring the nature of linguistic computations in the brain. PLOS Computational Biology.

- Ten Oever, S., Carta, S., Kaufeld, G., & Martin, A.E. (2022). Neural tracking of phrases in spoken language comprehension is automatic and task-dependent. eLife.

- Ten Oever, S. & Martin, A.E. (2021). An oscillating computational model can track pseudo-rhythmic speech by using linguistic predictions. eLife.

- Kaufeld, G., Bosker, H.R., Ten Oever, S., Alday, P.M., Meyer, A.S., & Martin, A.E. (2020). Linguistic structure and meaning organize neural oscillations into a content-specific hierarchy. Journal of Neuroscience.

- Ten Oever, S., Hausfeld, L., Correia, J.M., Van Atteveldt, N., Formisano, E., & Sack, A.T. (2016). A 7T fMRI study investigating the influence of oscillatory phase on syllable representations. NeuroImage.

- Ten Oever, S. & Sack, A.T. (2015). Oscillatory phase shapes syllable perception. Proceedings of the National Academy of Sciences.

- Ten Oever, S., Sack, A.T., Wheat, K.L., Bien, N., & Van Atteveldt, N. (2013). Audio-visual onset differences are used to determine syllable identity for ambiguous audio-visual stimulus pairs. Frontiers in Psychology.